Interactive Sound Design

NOTE: The interactive web-based examples below use the Web Audio API.

Retro Shooter

Sample-based approach for incorporating audio assets and SFX in a game environment.

LEFT: left | RIGHT: right

SHIFT: boost | SPACE: laser

Stochastic Drums

Sample-based approach that uses user interaction to create and modify drum patterns in real time.

Audio Controls

Sound Interface

HiHat Sequence

Snare Sequence

Kick Sequence

Instructions

1. Turn the audio context on by pressing the green button in the Audio Controls.

2. Type a sequence of probabilistic weights for hihat, snare, and kick drum.

A sequence must consist of values between 0 and 1, separated by commas.

For example:

0.1, 0.5, 0.7

To play fixed patterns, use sequences of 1 and 0.

For example, a Cuban tresillo would be 1, 0, 0, 1, 0, 0, 1, 0.

3. Use the blue and the red buttons to pause/resume and stop the audio context, respectively.

NOTE: The system starts with default values for the three sequences, as a working template:

- hihat = 0.5,0.5,0.5,0

- snare = 0,0,0.5,0

- kick = 0.5,0,0,0.6
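The weighting scheme in the instructions above can be read as one random draw per step: a drum fires when the draw falls below that step's weight, so 1 always fires and 0 never does. The sketch below illustrates that reading only. The function names are hypothetical, and the looping behaviour and the rejection of out-of-range values are assumptions, not a description of how this page is implemented.

```typescript
// Hypothetical helpers; the page's actual code is not shown in the text.

/** Parse "0.5, 0, 1" into [0.5, 0, 1], rejecting values outside [0, 1]. */
function parseWeights(text: string): number[] {
  return text.split(",").map((token) => {
    const trimmed = token.trim();
    const weight = Number(trimmed);
    if (trimmed === "" || Number.isNaN(weight) || weight < 0 || weight > 1) {
      throw new RangeError(`Invalid weight: "${token}"`);
    }
    return weight;
  });
}

/**
 * Decide whether a drum fires at a given step.
 * Assumption: the sequence loops once the step index passes its end.
 * `random` is injectable so the decision can be tested deterministically.
 */
function shouldTrigger(
  weights: number[],
  step: number,
  random: () => number = Math.random
): boolean {
  // An empty sequence yields no weight; treat that as "never fire".
  const weight = weights[step % weights.length] ?? 0;
  return random() < weight;
}
```

In a browser, a positive result would typically start a sample, for instance through an AudioBufferSourceNode from the Web Audio API. Sequences of different lengths for hihat, snare, and kick would drift against each other under the looping assumption, which is one way short patterns can produce longer variations.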

Additive Synthesis

Real-time sound synthesis example with filters and parameterization.

Audio Controls

Sound Interface

filter

volume

Instructions

1. Turn the audio context on by pressing the green button in the Audio Controls.

2. Click on the main button to generate a tone and use the filter slider to change the resonant frequency.

3. Use the blue and the red buttons to pause/resume and stop the audio context, respectively.
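As a rough illustration of the additive principle behind this example, a tone can be built by summing sinusoidal partials at integer multiples of a fundamental frequency. The sketch below computes the samples directly and leaves out the filter stage. All names are hypothetical, and nothing here is taken from the page's own implementation.

```typescript
// Hypothetical sketch of additive synthesis as a sum of sine partials.

interface Harmonic {
  multiple: number;  // integer multiple of the fundamental frequency
  amplitude: number; // linear gain applied to this partial
}

/** Value of the summed partials at a single instant (in seconds). */
function additiveSample(
  fundamentalHz: number,
  harmonics: Harmonic[],
  timeSec: number
): number {
  let sum = 0;
  for (const h of harmonics) {
    sum += h.amplitude * Math.sin(2 * Math.PI * fundamentalHz * h.multiple * timeSec);
  }
  return sum;
}

/** Render a tone into a buffer of floor(sampleRate * durationSec) samples. */
function renderTone(
  fundamentalHz: number,
  harmonics: Harmonic[],
  sampleRate: number,
  durationSec: number
): Float32Array {
  const length = Math.floor(sampleRate * durationSec);
  const out = new Float32Array(length);
  for (let n = 0; n < length; n++) {
    out[n] = additiveSample(fundamentalHz, harmonics, n / sampleRate);
  }
  return out;
}
```

With the Web Audio API, the same idea is usually expressed with one OscillatorNode and GainNode per partial, and a BiquadFilterNode could play the role of the filter slider. Whether this page is wired that way is an assumption.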

Data-Driven Sound Design

3D Audio & Parameter Mapping

Auditory display of coral bleaching data

Reef Elegy is an auditory display of Hawaii’s 2019 coral bleaching data that uses spatial audio and parameter mapping methods. Selected data fields spanning 78 days are mapped to sound surrogates of coral reefs’ natural soundscapes, whose constituent elements are progressively altered as the corresponding coral locations undergo bleaching. For some of these elements, this process traces a trajectory from a dense soundscape to a sparser, reduced one, while for others it translates into a shift away from harmonic tones and towards complex spectra. This project has a dedicated website. Below are two examples from the prototype version described here.

Example #1: synthesis-based

Example #2: sample-based
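Parameter mapping, in its simplest form, rescales a data value into the range of a sound parameter. The sketch below shows a clamped linear mapping and applies it to a made-up case: a bleaching percentage that thins out sound events as it rises, echoing the dense-to-sparse trajectory described above. The function names, the 0 to 100 input range, and the 12 to 2 events-per-second output range are illustrative assumptions, not values from Reef Elegy.

```typescript
// Hypothetical sketch of linear parameter mapping with clamping.

/** Map `value` from [inMin, inMax] to [outMin, outMax], clamping at the ends. */
function mapLinear(
  value: number,
  inMin: number,
  inMax: number,
  outMin: number,
  outMax: number
): number {
  if (inMax === inMin) {
    throw new RangeError("Input range must not be empty");
  }
  const t = Math.min(1, Math.max(0, (value - inMin) / (inMax - inMin)));
  return outMin + t * (outMax - outMin);
}

/**
 * Illustrative only: more bleaching means fewer sound events per second.
 * The ranges are invented for this example.
 */
function eventsPerSecond(bleachedPercent: number): number {
  return mapLinear(bleachedPercent, 0, 100, 12, 2);
}
```

An inverted output range (12 down to 2) is what turns rising data values into a sparser result. Real mappings are often nonlinear, for example logarithmic for frequency, so the linear form here is a starting point rather than a recommendation.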

Artificial Neural Networks

Auditory display of Tokyo's air temperature data

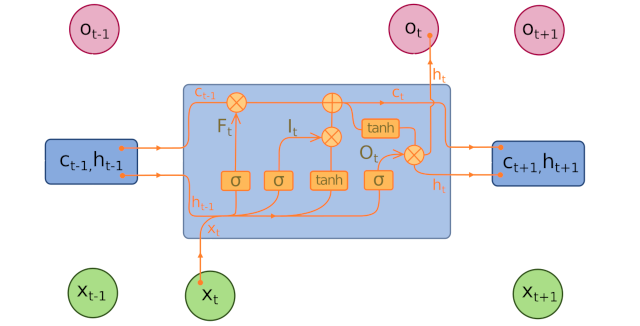

Tokyo kion-on is a query-based sonification model of Tokyo’s air temperature from 1876 to 2021. The system uses a recurrent neural network architecture known as LSTM (see below) with attention, trained on a small dataset of Japanese melodies and conditioned on the air temperature data. Find more details here. Below are examples of melodies generated using different years and seeds as queries:

Tokyo, 1876: seed=A4 quarter note

Tokyo, 1980: seed=A4 quarter note

Tokyo, 2021: seed=empty

Sonification Techniques Study

Auditory display of motion capture data

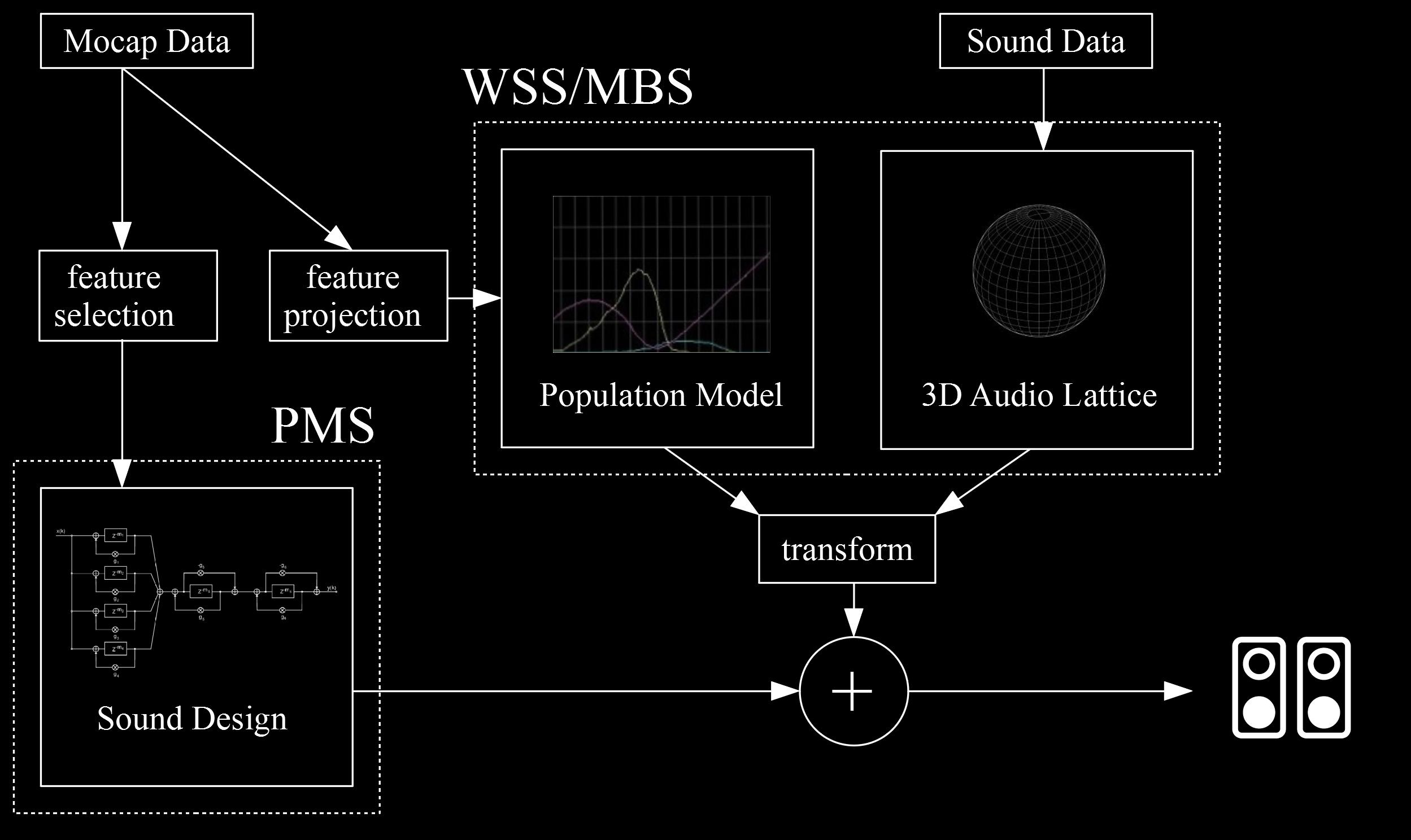

A study on the notion of surrogacy, originally described by Denis Smalley as remoteness between source and sonic gesture. Here, surrogacy is extended to physical gesture, for the rendering of contemporary dance performances into abstract audiovisual compositions/objects, using motion capture data. The sonification process also employs the ideas of mutualism and acoustic niche. Read more about it here.

Below are different levels of audiovisual surrogacy (from low to high order).

Level 1

Level 2

Level 3